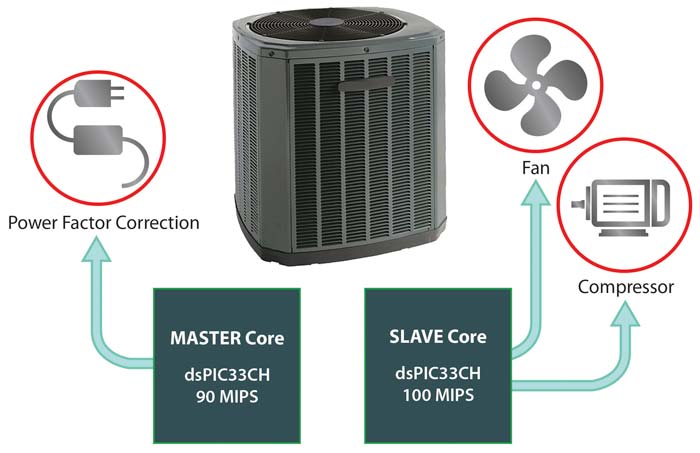

A representative example is advanced power-supply design. Today’s implementations call not only for precise, efficient control of energy conversion through mathematical algorithms and real-time pulse width modulation (PWM) control, but also for connectivity to relay real-time operational status to, and receive commands from, a system-level management unit using protocols such as PMBus. Similarly, in an automotive fan or pump controller, communication through a protocol such as Controller Area Network with Flexible Data-Rate (CAN-FD) is needed for commands, system monitoring and diagnostics reporting. An air conditioning unit may have even more complex requirements, with separate DSP-oriented tasks to support power-factor correction in its mains power supply as well as closed-loop motor control in fan and pump units.
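To make the PMBus status path concrete: many PMBus telemetry values, such as output current or temperature readings, are carried in the specification's LINEAR11 format, a 16-bit word holding a 5-bit two's-complement exponent N in bits [15:11] and an 11-bit two's-complement mantissa Y in bits [10:0], representing Y × 2^N. The sketch below decodes such a word on the management side. The function name is hypothetical and not part of any Microchip library.

```c
#include <stdint.h>

/* Hypothetical helper: decode a PMBus LINEAR11 word into a double.
 * Bits [15:11] = exponent N (5-bit two's complement),
 * bits [10:0]  = mantissa Y (11-bit two's complement), value = Y * 2^N. */
double pmbus_linear11_to_double(uint16_t raw)
{
    int exponent = raw >> 11;          /* 0..31 before sign extension */
    int mantissa = raw & 0x07FF;       /* 0..2047 before sign extension */

    if (exponent > 15) exponent -= 32;     /* sign-extend the 5-bit field */
    if (mantissa > 1023) mantissa -= 2048; /* sign-extend the 11-bit field */

    /* Scale by 2^N with integer shifts to avoid a libm dependency. */
    double value = (double)mantissa;
    if (exponent >= 0)
        value *= (double)(1L << exponent);
    else
        value /= (double)(1L << -exponent);
    return value;
}
```

For example, the word 0xF032 carries N = -2 and Y = 50, which decodes to 12.5 in whatever unit the command defines.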

In principle, a single high-speed CPU core can, through time-slicing, run many independent threads to handle both low-latency real-time control tasks and networking and system-management tasks. However, a core designed to achieve such high performance in any given process technology may be suboptimal in terms of power consumption and complexity.

A further issue for any real-time application running on a single core is how easily threads and interrupt handlers will meet their respective deadlines. With any resource-sharing implementation, a concern is the length of time that a given thread will be blocked from running by an unrelated process or interrupt handler. Even when threads have no interdependencies, the conservative algorithms used to calculate the headroom needed to guarantee that every deadline is met under all conditions call for leaving a significant portion of processing cycles unallocated.
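One classic example of such a conservative test is the Liu and Layland utilisation bound for rate-monotonic scheduling: n independent periodic tasks, each with its deadline equal to its period, are guaranteed to be schedulable if total utilisation does not exceed n(2^(1/n) - 1), a limit that falls towards ln 2, about 69%, as n grows. The article does not name a specific algorithm, so treat this as an illustrative choice; the type and function names below are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical task descriptor: worst-case execution time and period,
 * in the same time unit. Deadlines are assumed equal to periods. */
typedef struct {
    double wcet;
    double period;
} periodic_task;

/* 2^(1/n) by Newton's method on x^n - 2 = 0 (avoids a libm dependency).
 * Bernoulli's inequality gives (1 + 1/n)^n >= 2, so the start point is at or
 * above the root and the iteration converges monotonically. Requires n >= 1. */
static double nth_root_of_two(unsigned n)
{
    double x = 1.0 + 1.0 / n;
    for (int iter = 0; iter < 60; ++iter) {
        double x_pow = 1.0;                  /* x^(n-1) */
        for (unsigned k = 1; k < n; ++k)
            x_pow *= x;
        x -= (x_pow * x - 2.0) / (n * x_pow);
    }
    return x;
}

/* Liu & Layland bound for rate-monotonic priorities: n(2^(1/n) - 1). */
double rm_utilisation_bound(unsigned n)
{
    if (n == 0) return 1.0;
    return n * (nth_root_of_two(n) - 1.0);
}

/* Sufficient (not necessary) test: true guarantees schedulability,
 * false only means this bound cannot prove it. */
bool rm_bound_test(const periodic_task *tasks, size_t count)
{
    double utilisation = 0.0;
    for (size_t i = 0; i < count; ++i)
        utilisation += tasks[i].wcet / tasks[i].period;
    return utilisation <= rm_utilisation_bound((unsigned)count);
}
```

For two tasks with C = 2, T = 4 and C = 2, T = 5 (utilisation 0.9), the bound of about 0.828 rejects the set, even though an exact response-time analysis shows both deadlines are met. That gap is the kind of unallocated headroom the paragraph describes.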

Frequent task switching also carries an overhead that reduces processing throughput. When a single core must service a large number of interrupting events, the cost of interrupt handling and the associated task switching can be significant.
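A back-of-the-envelope calculation shows how this overhead adds up: the fraction of cycles spent purely on interrupt entry, context save and restore, and exit is the sum over all interrupt sources of rate × overhead cycles, divided by the core clock frequency. The rates and cycle counts used here are illustrative assumptions, not measured figures for any particular device, and the names are hypothetical.

```c
#include <stdint.h>

/* Hypothetical description of one interrupt source. */
typedef struct {
    uint32_t rate_hz;          /* how often the interrupt fires */
    uint32_t overhead_cycles;  /* entry + context save/restore + exit */
} irq_source;

/* Fraction of all CPU cycles consumed by interrupt bookkeeping alone,
 * before any useful handler work is counted. */
double irq_overhead_fraction(const irq_source *src, unsigned count, uint32_t cpu_hz)
{
    double cycles_per_second = 0.0;
    for (unsigned i = 0; i < count; ++i)
        cycles_per_second += (double)src[i].rate_hz * src[i].overhead_cycles;
    return cycles_per_second / cpu_hz;
}
```

With a hypothetical 200 kHz control interrupt and a 10 kHz communications interrupt, each costing 40 cycles of overhead on a 100 MHz core, about 8.4% of all cycles go to interrupt bookkeeping.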

One option is to create more performance headroom through even higher clock speeds. In practice, it can make much more sense to divide the application across more than one processor core. For any multitasking application that is not primarily dependent on the throughput of a single thread, parallelism often leads to greater energy efficiency, greater determinism and easier development.

A dual-core implementation can share the workload of a multitasking system more effectively. It can also allow each core to run at a lower clock frequency, which is a better match for the access time of flash memory and reduces or eliminates the stall cycles (wait states) during which the processor has to wait for instructions or data to return from a fetch request.
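The relationship between clock frequency and wait states can be sketched as follows: if a flash read takes t_acc and the clock period is 1/f, a fetch spans ceil(t_acc × f) cycles, and every cycle beyond the first is a wait state. The 40 ns access time in the example is an assumed figure for illustration only; real parts, including any prefetch or cache logic, are specified in their datasheets. The function name is hypothetical.

```c
#include <stdint.h>

/* Wait states needed when a flash read takes access_ns nanoseconds and the
 * core runs at cpu_hz. The read spans ceil(access_ns * cpu_hz / 1e9) cycles;
 * the first is the normal fetch cycle, the rest are stalls. */
uint32_t flash_wait_states(uint64_t cpu_hz, uint32_t access_ns)
{
    const uint64_t ns_per_s = 1000000000ULL;
    uint64_t cycles = (cpu_hz * access_ns + ns_per_s - 1) / ns_per_s; /* ceiling */
    return cycles > 1 ? (uint32_t)(cycles - 1) : 0;
}
```

Under that assumed 40 ns access time, a 200 MHz core would need 7 wait states per uncached fetch, a 100 MHz core 3, and a 25 MHz core none.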

In some applications, the closely coupled nature of tasks that handle related data feeds still favours a single pipeline. But when a high-performance embedded application executes different functions that are relatively loosely coupled, using more than one core makes more sense.

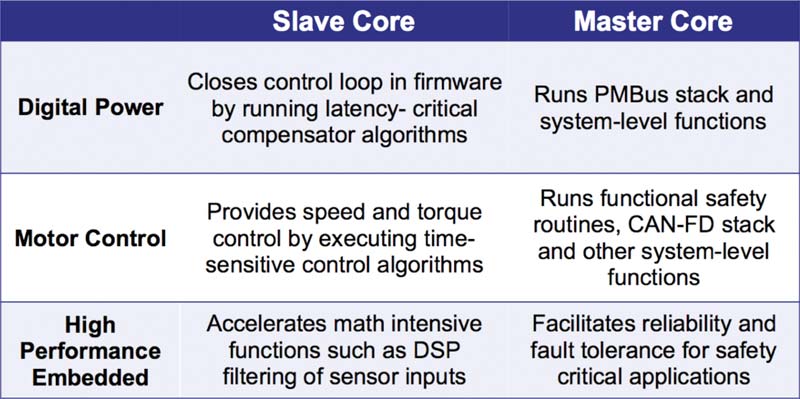

For example, in a power supply where the closed-loop control is implemented in firmware, performance is predominantly determined by the time it takes to convert an analogue sample to digital, calculate a new duty cycle from that data, and then update the PWM. With a multi-core controller, it is possible to ensure this latency-critical function is not impeded by other system activities by running it on a core that has no other high-priority tasks to perform. In parallel with the time-critical control-loop calculations, another CPU core can take on other responsibilities such as PMBus communications and system monitoring. Similarly, in a motor control application, splitting the control-loop processing and the CAN interface stack across different cores ensures that the motor’s commutation is precise and deterministic.
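The latency-critical path described above (sample, compute, update) can be sketched as a proportional-integral (PI) update run once per ADC conversion. The article does not specify the control law; PI with a clamped integrator is assumed here as a common choice, and the gains, limits and the ISR, ADC and PWM function names in the comment are hypothetical placeholders, not a Microchip API.

```c
/* Minimal PI voltage-loop state (hypothetical; floating point for clarity). */
typedef struct {
    float kp;          /* proportional gain */
    float ki;          /* integral gain per sample (Ki * Ts folded in) */
    float integrator;  /* accumulated integral term */
    float duty_min;    /* PWM duty limits, as fractions of the period */
    float duty_max;
} pi_controller;

static float clampf(float x, float lo, float hi)
{
    return x < lo ? lo : (x > hi ? hi : x);
}

/* Turn the latest sample into a new duty cycle. The integrator is clamped
 * to the duty range as a simple anti-windup measure. */
float pi_update_duty(pi_controller *pi, float setpoint, float measured)
{
    float error = setpoint - measured;
    pi->integrator = clampf(pi->integrator + pi->ki * error,
                            pi->duty_min, pi->duty_max);
    return clampf(pi->kp * error + pi->integrator, pi->duty_min, pi->duty_max);
}

/* Hypothetical wiring on the dedicated control core (placeholder names):
 *   void adc_done_isr(void) {
 *       float v = adc_read_volts();
 *       pwm_set_duty(pi_update_duty(&vloop, 12.0f, v));
 *   }
 */
```

On a dedicated core, the time taken by this update, together with the ADC conversion and PWM register write, is what bounds the loop latency, rather than whatever else the application happens to be doing.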

Split processing brings a further benefit in terms of project development time, although taking advantage of it depends on the two cores being homogeneous. One traditional approach to multiprocessing was to divide the workload according to processor type.

Signal-processing code would run on a pipeline optimised for multiply-accumulate operations but with little ability to run control code efficiently, while a general-purpose processor took care of branch-intensive routines. In practice, this is a difficult architecture to work with in many real-time applications.
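The multiply-accumulate pattern at the heart of such signal-processing code is easiest to see in a fixed-point FIR filter, where each tap is one MAC into a wide accumulator. The sketch below is portable C using Q15 fractional arithmetic; it is not dsPIC-specific code, and a DSC compiler or its intrinsics would map the loop onto hardware MAC instructions in its own way. The function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Q15 FIR filter: each tap is one multiply-accumulate into a wide accumulator.
 * coeffs and history are Q15 values; history[0] is the newest sample. */
int16_t fir_q15(const int16_t *coeffs, const int16_t *history, size_t taps)
{
    int64_t acc = 0;                             /* wide accumulator avoids overflow */
    for (size_t i = 0; i < taps; ++i)
        acc += (int32_t)coeffs[i] * history[i];  /* Q15 x Q15 = Q30 product */

    acc /= 32768;                                /* Q30 -> Q15, truncating toward zero */
    if (acc > INT16_MAX) acc = INT16_MAX;        /* saturate rather than wrap */
    if (acc < INT16_MIN) acc = INT16_MIN;
    return (int16_t)acc;
}
```

A pipeline built for this inner loop streams the MACs efficiently but handles the branch-heavy decision code around it poorly, which is the split the paragraph describes.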

The signal-processing operations often depend on external conditions that may change rapidly. The interprocessor communications needed to synchronise state across the different cores can be complex to implement, because they impose tighter timing constraints than the messages used to relay commands and status updates to a network interface.
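By comparison, the looser case of relaying status updates can be handled with a simple non-blocking mailbox. The sketch below is a generic single-producer, single-consumer ring buffer using C11 atomics. It assumes both cores can see a shared memory region and that the toolchain supports `<stdatomic.h>`; neither should be taken for granted on a given dual-core part, which may instead provide dedicated interprocessor hardware. The message fields and function names are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define MBOX_SLOTS 8u   /* must be a power of two so index wrap-around stays consistent */

/* Hypothetical status message from the control core to the comms core. */
typedef struct {
    uint16_t vout_mv;
    uint16_t iout_ma;
    uint8_t  fault_flags;
} status_msg;

typedef struct {
    status_msg slot[MBOX_SLOTS];
    atomic_uint head;   /* written only by the producer (control core) */
    atomic_uint tail;   /* written only by the consumer (comms core) */
} status_mailbox;

void mbox_init(status_mailbox *mb)
{
    atomic_init(&mb->head, 0u);
    atomic_init(&mb->tail, 0u);
}

/* Producer side: never blocks; returns false if the mailbox is full. */
bool mbox_push(status_mailbox *mb, status_msg msg)
{
    unsigned head = atomic_load_explicit(&mb->head, memory_order_relaxed);
    unsigned tail = atomic_load_explicit(&mb->tail, memory_order_acquire);
    if (head - tail == MBOX_SLOTS) return false;
    mb->slot[head % MBOX_SLOTS] = msg;
    atomic_store_explicit(&mb->head, head + 1, memory_order_release); /* publish */
    return true;
}

/* Consumer side: returns false if there is nothing to read. */
bool mbox_pop(status_mailbox *mb, status_msg *out)
{
    unsigned tail = atomic_load_explicit(&mb->tail, memory_order_relaxed);
    unsigned head = atomic_load_explicit(&mb->head, memory_order_acquire);
    if (head == tail) return false;
    *out = mb->slot[tail % MBOX_SLOTS];
    atomic_store_explicit(&mb->tail, tail + 1, memory_order_release); /* free slot */
    return true;
}
```

The producer never waits: if the consumer falls behind, `mbox_push` reports the mailbox as full and that update can be dropped. Tightly synchronised shared state cannot tolerate that kind of slack, which is why it is much harder to implement.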

Unified digital signal controller architectures such as Microchip’s dsPIC33 overcame these synchronisation problems by bringing the two types of execution behaviour together in a single architecture. Such a pipeline can stream multiply-accumulate and matrix operations at high speed while also offering fast branching and high responsiveness to interrupts, so that parameters and algorithms can adapt to changing conditions on the fly. This eases the software implementation of complex signal-processing algorithms.

However, pressure on design schedules means customers face code-integration challenges whichever architecture they choose. In many applications, the communication and control functionality is split between development teams, each specialising in its own area.

A key issue with integrating the code from two or more teams is determining how scheduling and task prioritization will work between them. Seemingly small decisions such as the priority of individual tasks can have a major impact on the overall real-time behaviour of the application.

A poor decision can lock vital tasks out of the processor for longer than good performance allows. With the task sets distributed across two processors, the engineers who know most about the relative priorities of the threads in their part of the application are the ones responsible for setting those priorities.
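The effect of a single priority decision can be quantified with standard response-time analysis for fixed-priority preemptive scheduling, which iterates R = C_i + (sum over higher-priority tasks j of ceil(R / T_j) × C_j) until R stops changing. The two-task example below, a 20 kHz control loop and a slower communications task, uses illustrative timings, and the names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RT_DEADLINE_MISS UINT32_MAX

/* Hypothetical task descriptor; times in microseconds, deadline = period. */
typedef struct {
    uint32_t wcet;
    uint32_t period;
} rt_task;

/* Worst-case response time of tasks[index] under fixed-priority preemptive
 * scheduling. tasks[] is ordered from highest to lowest priority.
 * Returns RT_DEADLINE_MISS if the response time exceeds the period. */
uint32_t response_time(const rt_task *tasks, size_t index)
{
    uint32_t r = tasks[index].wcet;
    for (;;) {
        uint32_t next = tasks[index].wcet;
        for (size_t j = 0; j < index; ++j)      /* preemption by higher priorities */
            next += ((r + tasks[j].period - 1) / tasks[j].period) * tasks[j].wcet;
        if (next > tasks[index].period) return RT_DEADLINE_MISS;
        if (next == r) return r;                 /* fixed point reached */
        r = next;
    }
}

bool all_deadlines_met(const rt_task *tasks, size_t count)
{
    for (size_t i = 0; i < count; ++i)
        if (response_time(tasks, i) == RT_DEADLINE_MISS) return false;
    return true;
}
```

With the control loop (30 µs every 50 µs) at the higher priority, the communications task (100 µs every 1 ms) completes within 250 µs and both deadlines hold. Swap the two priorities and the control loop can be delayed by a full 100 µs communications burst, so it misses its 50 µs deadline, even though total utilisation is only 70%.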

Split processing also makes data memory easier to manage and allocate, and each team can be sure that the makefiles and linker settings it created and debugged during the project remain in place in the final software package. This reduces the load on the software-integration team and shortens time to market.

Although split processing already helps optimise both development effort and processing throughput, Microchip continues to make architectural improvements to increase performance. One example in the dual-core dsPIC33CH is an increased number of context-selected registers to boost interrupt responsiveness. The new dsPIC33CH core also implements additional instructions to increase DSP performance.

As a Digital Signal Controller (DSC), the dsPIC33CH includes a number of advanced peripherals to reduce system cost and board size. They include high-speed ADCs, DACs with waveform generation, analogue comparators, analogue programmable gain amplifiers and high-resolution PWM generators with resolution down to 250 ps. Features such as more intelligent peripherals and a peripheral trigger generator help reduce the number of interrupts a core must service in a power-supply or motor control application. For example, the UARTs provide hardware support for LIN/J2602, IrDA®, DMX and smart card protocol extensions to reduce software overhead. Likewise, the CAN-FD peripheral includes a bit stream processor and programmable automatic retransmission so that it can run more autonomously from the CPU core.
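To put the 250 ps figure in context, the number of distinct duty-cycle steps per PWM period is the switching period divided by the edge-placement resolution, and the equivalent resolution in bits is the base-2 logarithm of that count. The switching frequencies used below are illustrative, the function names are hypothetical, and the sketch ignores techniques such as dithering that can extend effective resolution.

```c
#include <stdint.h>

/* Distinct duty-cycle steps per PWM period when edges can be placed
 * with a time resolution of resolution_ps picoseconds. */
uint64_t pwm_duty_steps(uint64_t switching_hz, uint64_t resolution_ps)
{
    const uint64_t ps_per_s = 1000000000000ULL;
    return ps_per_s / (switching_hz * resolution_ps);
}

/* Whole bits of duty-cycle resolution: floor(log2(steps)). */
unsigned pwm_resolution_bits(uint64_t steps)
{
    unsigned bits = 0;
    while (steps >>= 1)
        ++bits;
    return bits;
}
```

At an assumed 500 kHz switching frequency, 250 ps steps give 8000 duty-cycle steps, just under 13 bits; at 1 MHz the count halves to 4000 steps.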

With a design focused on the development requirements of today’s engineering teams, Microchip’s dsPIC33CH is optimised for high-performance and time-critical, real-world embedded-control applications. The architecture provides the support customers require to “design separately, integrate seamlessly.”

The result is an architecture that increases performance while reducing time to market, system size and cost.

Author: Markus Wimmer, Business Development Manager, 16-bit microcontroller business unit

Microchip Technology | https://www.microchip.com