Sensor fusion systems spend a significant amount of resources on predicting the future. Here is why this improves automated driving functions.

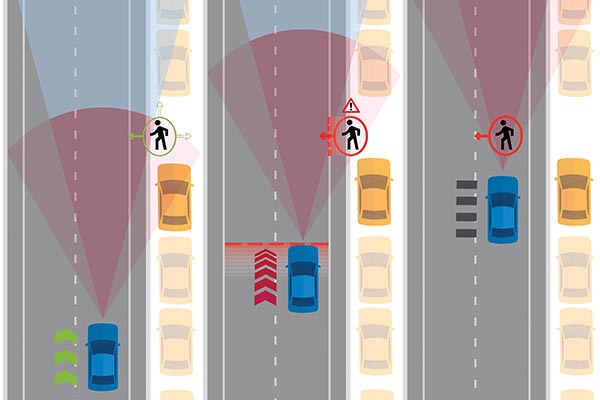

Challenging scenario for the protection of pedestrians inspired by the NCAP AEB test catalog. The pedestrian must be recognized as endangered before he or she enters the road so that the vehicle can initiate a harmless emergency stop. Predicting the pedestrian’s motion using different behavior assumptions is a crucial requirement for the sensor fusion system. (© BASELABS)

It is often stated that automated driving and advanced driver assistance systems (ADAS) need to correctly perceive the vehicle’s environment. In this context, we frequently see camera images with object detections that come from sophisticated detection methods based on artificial intelligence (AI), typically visualized as rectangular bounding boxes overlaid on the image.

From a human perspective, these image detections often give the impression that the problem is solved and that a machine now could decide how the car should proceed. However, in contrast to currently available machines, humans can extract much more context information from a single image. For example, a human might understand that a pedestrian is rushing to a cab and that he or she will cross the street so that harsh braking might be appropriate.

For an automated driving function such as autonomous emergency braking (AEB), with its limited recognition capabilities, to react similarly, it must know not only where an object is located right now but also where it will be in the near future. In other words, the object’s position needs to be predicted.

In this article, I will explain why prediction is not only used by the driving function itself but also has a massive impact on the performance of the sensor fusion system that provides the environmental model.

Sensor Fusion in a Nutshell

In automated driving, the combination of multiple diverse sensors compensates for individual sensor weaknesses, e.g., a camera detects pedestrians better than a radar, while a radar provides long-distance coverage. Converting the different sensor data into a uniform image of the vehicle environment is called sensor data fusion, or sensor fusion for short.

Sensor fusion is not a single monolithic algorithm. Instead, it assembles multiple algorithms whose selection and combination depend on the sensor setup and the driving function.

However, the main task of any sensor fusion system is to compare the sensor observations or measurements with the system’s expectation of these measurements. From the differences, the system’s state gets adapted or updated.

An example: A vehicle is driving in front of us. Let’s assume the sensor fusion system already knows that the vehicle is approximately 50 meters away and 10 m/s slower than us. If a sensor observes that vehicle 100 milliseconds later, the sensor fusion system would expect it at 49 meters using the simple kinematics s = s₀ + v∙t, the so-called motion model. However, if the vehicle was in reality 15 m/s slower than us, the observed distance would be 48.5 meters instead of 49 meters. From the difference 49 m − 48.5 m = 0.5 m, the sensor fusion system may conclude that its initial velocity estimate was “wrong” and should be around 15 m/s = 10 m/s + 0.5 m / 0.1 s instead. The system’s state gets updated.
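The arithmetic of this example can be written as a minimal Python sketch. The function names are hypothetical, and the relative velocity is given a negative sign because the gap shrinks. Like the example, the correction step attributes the entire residual to a velocity error; a real filter (for instance a Kalman filter) would instead weight the residual by the measurement and state uncertainties.

```python
def predict_distance(distance_m, relative_velocity_mps, dt_s):
    """Motion model from the text: s = s0 + v * t."""
    return distance_m + relative_velocity_mps * dt_s


def correct_velocity(relative_velocity_mps, predicted_m, measured_m, dt_s):
    """Attribute the whole prediction residual to a velocity error.

    Simplification for illustration only: measurement noise is ignored.
    """
    residual_m = measured_m - predicted_m
    return relative_velocity_mps + residual_m / dt_s


s0 = 50.0    # known distance to the vehicle in meters
v = -10.0    # relative velocity in m/s (negative: we are closing in)
dt = 0.1     # time until the next measurement in seconds

predicted = predict_distance(s0, v, dt)   # expected distance, about 49 m
measured = 48.5                           # distance the sensor actually reports
v_updated = correct_velocity(v, predicted, measured, dt)  # about -15 m/s
```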

To perform the described update step, sensor measurements need to be associated with already known objects or so-called tracks. For this association, all tracks are predicted to the time of the measurements using the motion model. Then, each measurement is associated with the track whose prediction is closest to the measurement, and the track gets updated with that measurement.
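The association step described above can be sketched as follows. This is a simplified illustration, not a production algorithm: the track layout, the function names, and the 2 m gate (the maximum distance at which a measurement is still accepted) are assumptions made for this example.

```python
import math


def predict_position(track, t_s):
    """Constant-velocity prediction of a track to time t_s."""
    dt = t_s - track["t"]
    return track["x"] + track["vx"] * dt, track["y"] + track["vy"] * dt


def associate(tracks, measurements, t_s, gate_m=2.0):
    """Assign each measurement to the track with the closest prediction.

    Returns {measurement index: track id or None}. The gate (an assumption
    of this sketch) leaves measurements far from every track unassociated.
    """
    predictions = {tid: predict_position(trk, t_s) for tid, trk in tracks.items()}
    assignment = {}
    for i, (mx, my) in enumerate(measurements):
        best_id, best_dist = None, gate_m
        for tid, (px, py) in predictions.items():
            dist = math.hypot(mx - px, my - py)
            if dist < best_dist:
                best_id, best_dist = tid, dist
        assignment[i] = best_id
    return assignment


# Illustrative values: two known tracks at t = 0 s, three measurements at t = 0.1 s.
tracks = {
    "car": {"x": 50.0, "y": 0.0, "vx": -10.0, "vy": 0.0, "t": 0.0},
    "pedestrian": {"x": 20.0, "y": 5.0, "vx": 0.0, "vy": -1.5, "t": 0.0},
}
measurements = [(49.1, 0.1), (20.0, 4.8), (80.0, 10.0)]
assignment = associate(tracks, measurements, t_s=0.1)
```

Note that this greedy scheme lets several measurements select the same track. It is only meant to show that the association can be no better than the prediction it relies on.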

The prediction quality is essential for the association and thus for the overall sensor fusion performance.

Suppose the association fails to find a measurement close to a predicted track, or it selects a track the measurement does not belong to due to an unsuitable prediction. In that case, tracks either won’t get updated or get updated using the wrong measurement, which in turn leads to invalid track states like a wrong track position. Often, such incorrect association leads to a sensor fusion failure and incorrect driving function behavior. Let’s see how we can avoid this.

The Right Number of Models

While the example’s motion model seems obvious, other models might be more appropriate:

- If the sensor fusion needs to support turning vehicles, models that include the curvature should be considered, e.g., the so-called constant curvature and acceleration (CCA) model.

- If the same sensor fusion system also needs to support pedestrians, a motion model with more degrees of freedom could be used, e.g., the constant velocity (CV) model.

- For bicyclists, the CCA model might be used as well. However, the model parameters probably differ from the parameters used for vehicles.

Using a separate model for each object class can significantly increase the sensor fusion performance because better track predictions lead to better associations.

Unfortunately, the class of an object is often not reliably known to the sensor fusion system if it is a “new” object, e.g., an object that enters the sensor’s field of view. Imagine the radar of a system detects the new object first (and does not provide classification information). Then, the system cannot decide on a single motion model as this could be wrong and, thus, would again lead to wrong predictions and associations.

Instead, the sensor fusion should create multiple hypotheses, one for each class the system should support, e.g., one hypothesis assuming that the object is a vehicle using the CCA model and one hypothesis assuming that the object is a pedestrian using the CV model. Then, all of these hypotheses can be used to predict the track’s state.

With such a Multiple Model approach, it becomes more likely that the correct measurements get associated with the track.
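A minimal sketch of this idea, under several assumptions: the CCA model is simplified and integrated with small Euler steps instead of a closed-form solution, all state values are illustrative, and every function name is hypothetical. Each class hypothesis predicts the same track with its own motion model, and the measurement is compared against all predictions.

```python
import math


def predict_cv(state, dt):
    """Constant velocity: state = (x, y, vx, vy)."""
    x, y, vx, vy = state
    return (x + vx * dt, y + vy * dt, vx, vy)


def predict_cca(state, dt, steps=20):
    """Simplified constant curvature and acceleration model.

    state = (x, y, heading, speed, accel, curvature); integrated with
    small Euler steps rather than a closed-form solution.
    """
    x, y, heading, speed, accel, curvature = state
    h = dt / steps
    for _ in range(steps):
        x += speed * math.cos(heading) * h
        y += speed * math.sin(heading) * h
        heading += speed * curvature * h
        speed += accel * h
    return (x, y, heading, speed, accel, curvature)


# One track, two class hypotheses (all values are illustrative).
hypotheses = {
    "vehicle": (predict_cca, (10.0, 0.0, 0.0, 10.0, 0.0, 0.05)),
    "pedestrian": (predict_cv, (10.0, 0.0, 1.5, 0.0)),
}


def closest_hypothesis(hypotheses, measurement, dt):
    """Predict every hypothesis and return (class, distance) of the closest one."""
    mx, my = measurement
    distances = {}
    for cls, (model, state) in hypotheses.items():
        px, py = model(state, dt)[:2]
        distances[cls] = math.hypot(mx - px, my - py)
    best = min(distances, key=distances.get)
    return best, distances[best]


best_class, best_distance = closest_hypothesis(hypotheses, (10.8, 0.05), dt=0.5)
```

With these values, the measurement lies close to the pedestrian prediction and far from the vehicle prediction, so the track can still be updated even though a vehicle-only model would have missed the measurement.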

Over time, as more measurements arrive, the sensor fusion system should resolve the model ambiguity as soon as possible to save compute and memory resources. There are several ways to discard invalid hypotheses:

- The actual motion of the object may not fit a track hypothesis. In such a case, measurements may appear at a large distance from the hypothesis. Sensor fusion systems with integrated existence estimation decrease the existence probability of hypotheses whose measurements are unlikely. If the existence value drops below a specific threshold, the hypothesis gets removed.

- The object’s class is determined by a sensor like a camera at some point in time. Hypotheses that belong to other classes can be removed.
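Both removal options can be sketched in a few lines. The update rule below is a plain two-class Bayes update against a constant clutter density, chosen for brevity; it is not the existence estimation of any particular product or published filter. All names, the noise level, the clutter density, and the threshold are hypothetical.

```python
import math


def gaussian_likelihood(distance_m, sigma_m):
    """1D Gaussian density of the distance between measurement and prediction."""
    norm = sigma_m * math.sqrt(2.0 * math.pi)
    return math.exp(-0.5 * (distance_m / sigma_m) ** 2) / norm


def update_existence(p_exist, distance_m, sigma_m=1.0, clutter_density=0.05):
    """Bayes update: does the hypothesis or clutter explain the measurement?"""
    explained = p_exist * gaussian_likelihood(distance_m, sigma_m)
    return explained / (explained + (1.0 - p_exist) * clutter_density)


def prune(hypotheses, min_existence=0.1, confirmed_class=None):
    """Drop unlikely hypotheses and, if a class is confirmed, all other classes."""
    return [
        h for h in hypotheses
        if h["p_exist"] >= min_existence
        and (confirmed_class is None or h["cls"] == confirmed_class)
    ]


hypotheses = [
    {"cls": "vehicle", "p_exist": 0.5},
    {"cls": "pedestrian", "p_exist": 0.5},
]
# Illustrative distances between the measurement and each hypothesis' prediction.
distances_m = {"vehicle": 4.0, "pedestrian": 0.2}

for h in hypotheses:
    h["p_exist"] = update_existence(h["p_exist"], distances_m[h["cls"]])

survivors = prune(hypotheses)
```

In this example the vehicle hypothesis predicted a position 4 m away from the measurement, so its existence probability collapses and it is removed, while the pedestrian hypothesis survives. Passing `confirmed_class` to `prune` models the second option, where a camera classification removes all hypotheses of other classes.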

Modern sensor fusion systems have to handle even more hypotheses due to a higher level of ambiguity. A typical challenge in urban areas is that the objects’ driving direction or heading often cannot be reliably derived from the first frames after initial detection. If the “wrong” heading value is used in the motion model, the prediction goes wrong again. To overcome this, multiple hypotheses with different heading values are created, and the most likely hypothesis will survive in the sensor fusion system.
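The heading ambiguity can be illustrated with a small sketch. It assumes that the position and speed of a new object are known but its heading is not, spreads the hypotheses evenly over the full circle, and selects the survivor by distance to a single later measurement. These choices and all names are assumptions made for this example; a real system would accumulate evidence over several measurements.

```python
import math


def heading_hypotheses(x, y, speed, count=4):
    """Create hypotheses with headings spread evenly over 360 degrees."""
    return [
        {"x": x, "y": y, "speed": speed, "heading": 2.0 * math.pi * k / count}
        for k in range(count)
    ]


def predict(hypothesis, dt):
    """Straight-line prediction along the hypothesis' heading."""
    px = hypothesis["x"] + hypothesis["speed"] * math.cos(hypothesis["heading"]) * dt
    py = hypothesis["y"] + hypothesis["speed"] * math.sin(hypothesis["heading"]) * dt
    return px, py


def most_likely(hypotheses, measurement, dt):
    """Return the hypothesis whose prediction is closest to the measurement."""
    mx, my = measurement

    def distance(hypothesis):
        px, py = predict(hypothesis, dt)
        return math.hypot(mx - px, my - py)

    return min(hypotheses, key=distance)


# New object at the origin moving with 10 m/s; 0.5 s later it is seen near (0.2, 4.9).
candidates = heading_hypotheses(0.0, 0.0, speed=10.0)
survivor = most_likely(candidates, (0.2, 4.9), dt=0.5)
```

Here the hypothesis with a heading of 90 degrees survives because only its prediction ends up near the measurement.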

Handling all hypotheses correctly and efficiently is a complex task that needs to be addressed by modern sensor fusion systems.

In addition to dedicated motion models per object class, the detection and measurement models should be class-specific. This way, the sensor fusion can consider class-specific sensor characteristics and determine better measurement predictions.

The performance of sensor fusion systems depends on their capability to predict different object classes. In particular, sensor fusion systems should:

- support class-specific motion models using multiple model approaches to account for different object behaviors,

- initialize tracks using multiple hypotheses to cope with initialization ambiguities,

- apply different sensor models depending on the object class,

- handle the hypotheses efficiently to save CPU and memory resources.

Author:

Dr. Eric Richter, Director of Customer Relations and co-founder BASELABS

BASELABS