Implementing MCUs which combine graphic display controllers, with support for capacitive-touch, touch-screen and USB peripherals.

by Rishi Vasuki, Product Marketing Manager, Advanced Microcontroller Architecture Division, Microchip Technology

HMI designs in embedded systems are evolving fast as the cost of manufacturing fashionable and more elegant interfaces continues to decrease. Some applications are already combining touch-sensitive interfaces, such as keys, sliders, touch-screens and haptic feedback, with rich graphical displays using the latest generation of microcontrollers (MCUs) which integrate graphic display controllers, with peripherals for implementing capacitive-touch, touch-screen controllers and USB onto a single chip.

The new MCUs promise to combine lower system cost, with a wider range of options for higher system integration. However, while they enable designers to lower the cost of system hardware, manufacturing and inventory, the increased complexity of software development can impact on time to market and demands a robust integration of touch-sensing and other human-interface functions onto a single MCU.

First, consider the origin of these concerns. Take, for example, capacitive touch-sensing. When touch-sensitive keys were first introduced, designers soon realised that they were not as simple to implement as traditional push-buttons.

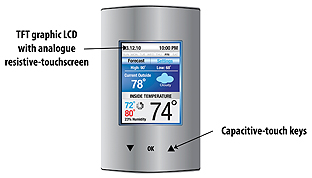

Touch-sensitive keys have to be handled in the same way as analogue sensors: Radiated noise, or noise that may be conducted from environmental sources such as common appliances, compact fluorescent lamps, power supplies, cell phones and motors, needs careful management. To achieve robust and responsive keys, software techniques such as envelope detection, filtering, de-bounce and slew rate filters have to be applied, in addition to ensuring a good layout for signal acquisition. Now, the need to refresh a segmented or graphical LCD, when a user input is received, must be added to this human-interface system. Rendering graphical constructs, such as geometrical shapes or text, onto a display, such as a TFT or OLED, has historically needed processor bandwidth. Also, consider human-interface applications that incorporate touch-screen input in addition to the graphics display and the touch-sensitive keys, such as the thermostat in Figure 1. Finally, a communication interface, such as USB, will typically be required.

The challenge, therefore, is to enable real-time processing of the user inputs derived from touch-sensitive keys, a touch-screen sensor and USB data communication as well as updating the display. The solution falls into two categories which are central to the underlying hardware and software.

Hardware implementation

There are a number of MCUs that combine an LCD controller and touch-sense peripheral on a single chip but, typically, the LCD controller drives a segmented display rather than a graphics LCD.

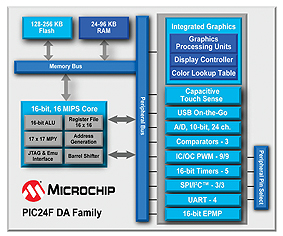

The latest generation of MCUs, such as the PIC24FJ256DA210, shown in Figure 2, takes integration to a new level by combining a graphical display controller, a USB 2.0 On-The-Go peripheral and a special analogue peripheral that can be used for touch-sensing. To support graphics displays, the PIC24FJ256DA210 has a built-in colour look-up table, a large 96KB RAM, a graphics processing unit (GPU) and a direct interface to STN, TFT and OLED displays. The large on-board RAM allows 256-colour graphics data to be stored, at 8-bits per pixel, for a 320×240 QVGA display within the on-chip RAM. Colour palettes, used in the colour look-up table, can also be switched to use a different set of 256 colours across different image frames. The GPU allows simple objects, such as lines, rectangles, ASCII text and PNG-like image decompression, to be rendered by issuing a single command.

This reduces the CPU overhead to 0%.

Figure 2 also shows the Charge Time Measurement Unit (CTMU) analogue peripheral. Capacitive touch-sensing is one of many applications supported by the CTMU peripheral. The CTMU provides a constant current source with a timer that can be used to charge a sensor pad. The voltage on the pad can be measured by the on-chip Analogue-to-Digital Converter (ADC). When a user’s finger is placed on the pad, the capacitance change at the sensor pad is recorded as a change in voltage by the ADC. In the simplest implementation, each ADC channel can be connected to a touch-sensitive key input. With 24 ADC channels, the PIC24FJ256DA210 provides sufficient capacitive-touch channels to cover the needs of most applications.

There is one further hardware consideration: If the application has both a resistive touch-screen input as well as touch-sensitive keys, for short-cut menu functions, the graphical LCD is overlaid with a resistive touch-screen sensor. If the touch-screen controller is integrated onto the main MCU, the touch-screen sensor outputs, which are typically 4 or 5 wires, may be connected to the MCU’s analogue channels. In this case, the ADC resource on the MCU is shared between the touch-sensitive key functions and the touch-screen function. The ADC measurements are used to estimate the X & Y coordinates sensed on the touch screen.

Software implementation

Typically, the firmware for the graphics display drivers and capacitive touch-sensing will be available as separate libraries. For an effective integration of these libraries, a main routine is required, functioning like a basic Real-Time Operating System (RTOS), to establish the priority and frequency of servicing each task. For tasks which share common hardware resources, the main routine also needs to establish a mechanism for non-destructive updating of the control and data registers for the shared resource, prior to switching between tasks. In the example above, both the touch-screen sensors and the touch-sensitive keys feed into the ADC. The rate of sampling the ADC, the channels being sampled and the number of samples needed, differ across the touch-screen sensor and the keys. Therefore it is necessary for the main routine to save these parameters before switching between the two tasks.

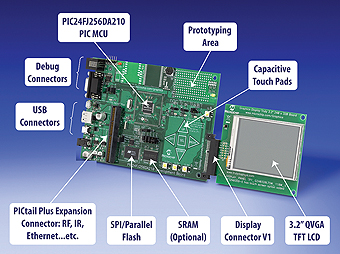

Since the user may, at any time, provide input to either the touch-screen or the keys, the main routine may need to use time-slicing to enable both sensors to be scanned frequently enough. The display may need periodic updates if, for example, the application is rendering animated graphics on the screen. If the display is only updated when the user makes menu selections, then there is no CPU resource contention between the touch-sensing and display-driver functions. As the example device (PIC24FJ256DA210) has dedicated graphics acceleration hardware, time-slicing between the touch and graphics functions is less of an issue. On this device, rendering a box, a line or ASCII text simply requires a single command to be issued by the CPU. A demonstration project that showcases the integration of the touch-sensitive keys, the touch-screen sensor and the graphics display, using underlying software libraries, is available for free download with the mTouch™ Capacitive-Touch library and can be run on the PIC24FJ256DA210 development board shown in the Figure 3.

There are other functions that may be integrated on a single chip, together with touch-sensing. For example, the CTMU peripheral can be used for temperature-sensing, medical instrumentation, time measurement or other functions. In an application such as a thermostat, it is possible to use the CTMU peripheral for temperature sensing, in addition to touch-sensing, by using an external diode. Since temperature measurement only needs to be carried out infrequently it is possible to share this peripheral across these two functions.

USB communications

Integrating USB with touch-sensing is relatively easy if simple rules are followed. When the application is connected to a USB host, it goes through an enumeration phase during which the CPU bandwidth may be largely dedicated to performing the USB function. Touch-sensing functions may be re-started in a couple of minutes, once the enumeration phase has been completed. Once enumeration is complete, the USB functions consume a very small amount of the CPU bandwidth, typically under 2%.

At this point, the main routine may choose to service the USB receiver function periodically, every millisecond or so, or switch to a more interrupt-driven approach.

Many applications with touch-sensitive interfaces have begun to incorporate haptic feedback. Integrating haptics into an application is more of a mechanical challenge. Typically, haptics require a simple Pulse Width Modulation (PWM) peripheral to drive a small vibrator or motor. It is conceivable that, in some applications, the on-chip PWM peripheral is also being used to drive an audio speaker. In such cases, an effective integration may require having separate time-bases for the PWM channel that is driving the haptics motor and the one driving the audio speaker.

Conclusion

Whilst single-chip integration of graphic display and touch-sensing features can lower system cost, software complexity can be a real factor in the time to market. Implementation is simplified by selecting an MCU platform which is supported by graphics, USB and touch-sensing software libraries that have been designed and tested to be inter-operable and where robust integration has been demonstrated.

www.microchip.com