In recent years, the number of connected IoT devices has exploded in markets as diverse as industrial automation, smart homes, building automation and wearables. These connected devices, or “things”, share one common trait: they communicate with each other and share data generated by multiple sensors. A forecast from International Data Corporation (IDC) estimates that there will be 41.6 billion connected IoT devices generating 79.4 zettabytes (ZB) of data in 2025.

As the number of connected devices increases, so does the amount of data that is generated. This data can be collected and, in many cases, analyzed and used to make decisions on the devices themselves, without the need for connectivity to the cloud. This ability to analyze data, draw insights from it and make autonomous decisions based on that analysis is the essence of Artificial Intelligence (AI). A combination of AI and IoT, or the Artificial Intelligence of Things (AIoT), enables the creation of “intelligent” devices that learn from data and make autonomous decisions without human intervention. As a result, products interact with their environment in a more logical, human-like way.

There are several drivers for this trend to build intelligence on edge devices – increased decision making on the edge reduces the latency and costs associated with cloud connectivity and makes real-time operation possible. The lack of bandwidth to the cloud is another reason to move compute and decision making onto the edge device. Security is also a consideration – requirements for data privacy and confidentiality drive the need to process and store data on the device itself.

The combination of AI and IoT has opened up new markets for MCUs. It has enabled a growing number of applications and use cases in which simple MCUs, paired with AI acceleration, provide intelligent control. These AI-enabled MCUs combine DSP capability for compute with machine learning (ML) for inference, and are now being used in applications as diverse as keyword spotting, sensor fusion, vibration analysis and voice recognition. Higher-performance MCUs enable more complex applications in vision and imaging, such as face recognition, fingerprint analysis and autonomous robots.

AI Technologies

As discussed, AI is the technology that enables IoT devices to learn from previous inputs, make decisions, and adjust their responses based on new input, all without human intervention. Here are some of the technologies that enable AI in IoT devices:

Machine learning (ML): Machine learning algorithms build models based on representative data, enabling devices to identify patterns automatically without human intervention. ML vendors provide algorithms, APIs and tools necessary to train models that can then be built into embedded systems. These embedded systems then use the pre-trained models to drive inferences or predictions based on new input data. Examples of applications are sensor hubs, keyword spotting, predictive maintenance and classification.

Deep learning: Deep learning is a class of machine learning that trains a system by using many layers of a neural network to extract progressively higher-level features and insights from complex input data. Deep learning works with very large, diverse and complex input data and enables systems to learn iteratively, improving the outcome with each step. Examples of applications that use deep learning are image processing, chatbots for customer service and face recognition.

Natural language processing (NLP): NLP is a branch of artificial intelligence that deals with interaction between systems and humans using natural language. NLP helps systems understand and interpret human language (text or speech) and make decisions based on that. Examples of applications are speech recognition systems, machine translation and predictive typing.

Computer vision: Machine/computer vision is a field of artificial intelligence that trains machines to gather, interpret and understand image data, and take action based on that data. Machines gather digital images and video from cameras, use deep learning models and image analysis tools to identify and classify objects accurately, and take action based on what they “see”. Examples are fault detection on manufacturing assembly lines, medical diagnostics, face recognition in retail stores and driverless car testing.

AIoT on MCUs

In the past, AI was the purview of MPUs and GPUs with powerful CPU cores, large memory resources and cloud connectivity for analytics. In recent years, though, with the trend towards increased intelligence on the edge, we are starting to see MCUs being used in embedded AIoT applications. The move to the edge is driven by latency and cost considerations and involves moving computation closer to the data. AI on MCU-based IoT devices allows real-time decision making and faster response to events, with the advantages of lower bandwidth requirements, lower power, lower latency, lower cost and higher security. AIoT is enabled by the higher compute capability of recent MCUs, as well as the availability of thin neural network (NN) frameworks that are better suited to the resource-constrained MCUs used in these end devices.

A neural network is a collection of nodes, arranged in layers that receive inputs from a previous layer and generate an output that is computed from a weighted and biased sum of the inputs. This output is passed on to the next layer along all its outgoing connections. During training, the training data is fed into the first or the input layer of the network, and the output of each layer is passed on to the next. The last layer or the output layer yields the model’s predictions, which are compared to the known expected values to evaluate the model error. The training process involves refining or adjusting the weights and biases of each layer of the network at each iteration using a process called backpropagation, until the output of the network closely correlates with expected values. In other words, the network iteratively “learns” from the input data set and progressively improves the accuracy of the output prediction.

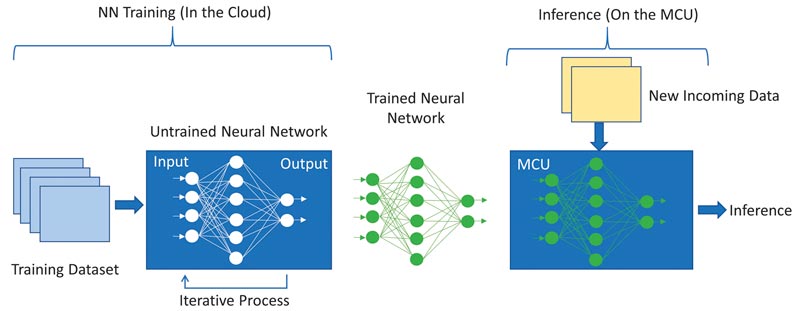
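The weighted and biased sum computed by each node can be illustrated with a minimal Python sketch. The layer sizes, weights and the ReLU activation below are assumptions chosen for illustration; they do not come from any particular model or framework.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer.

    Each node outputs an activation of the weighted sum of its inputs
    plus a bias. ReLU is used as the activation (an assumption).
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(max(0.0, weighted_sum))  # ReLU: negative sums become 0
    return outputs


def forward(inputs, layers):
    """Pass the input through each layer in turn and return the final output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs, a hidden layer of 2 nodes, 1 output node.
# Each layer is (weights, biases), with one row of weights per node.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5]),
    ([[2.0, 1.0]], [0.25]),
]

prediction = forward([1.0, 0.5], LAYERS)
print(prediction)  # [1.5]
```

With these values the hidden nodes produce 0.5 and 0.25, and the output node combines them as 2.0 × 0.5 + 1.0 × 0.25 + 0.25 = 1.5.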

The training of the neural network requires very high compute performance and memory and is usually carried out in the cloud. After the training, this pre-trained NN model is embedded in the MCU and used as an inference engine for new incoming data based on its training.

This inference generation requires much lower compute performance than the training of the model and is thus suited for an MCU. The weights of this pre-trained NN model are fixed and can be placed in flash, thus reducing the amount of SRAM required and making this suitable for more resource constrained MCUs.
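To give a feel for the split between flash and SRAM, here is a rough back-of-the-envelope sketch in Python. It assumes a small fully connected network with hypothetical layer sizes, counts only weights and biases for flash, and counts only the input and output buffers of the largest layer for SRAM. Real footprints also include code, runtime overhead and framework-specific buffers, so treat the numbers as illustrative only.

```python
def footprint_bytes(layer_sizes, bytes_per_weight=4, bytes_per_activation=4):
    """Estimate (flash_bytes, sram_bytes) for a fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    This is a simplified model, not a measurement of any real toolchain.
    """
    pairs = list(zip(layer_sizes, layer_sizes[1:]))
    # Fixed parameters (weights plus one bias per node) can live in flash.
    parameters = sum(n_in * n_out + n_out for n_in, n_out in pairs)
    flash_bytes = parameters * bytes_per_weight
    # While one layer is computed, only its input and output buffers
    # need to be held in SRAM, so the largest such pair sets the peak.
    peak_activations = max(n_in + n_out for n_in, n_out in pairs)
    sram_bytes = peak_activations * bytes_per_activation
    return flash_bytes, sram_bytes


# Hypothetical model: 40 input features, two hidden layers, 4 output classes.
LAYER_SIZES = [40, 32, 16, 4]

print(footprint_bytes(LAYER_SIZES))        # 32-bit floats: (7632, 288)
print(footprint_bytes(LAYER_SIZES, 1, 1))  # 8-bit values:  (1908, 72)
```

In this simplified model the 1,908 parameters dominate the footprint, and because they are fixed after training they can sit in flash while SRAM holds only a few hundred bytes of working data.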

Implementation on MCUs

The AIoT implementation on MCUs involves a few steps. The most common approach is to use one of the available neural network (NN) frameworks suitable for MCU-based end devices, such as Caffe or TensorFlow Lite. The training of the NN model for machine learning is done in the cloud by AI specialists using tools provided by AI vendors. The optimization of the NN model and its integration on the MCU are carried out using tools from the AI vendor and the MCU manufacturer. Inferencing is done on the MCU using the pre-trained NN model.

The first step in the process is done completely offline and involves capturing a large amount of data from the end device or application, which is then used to train the NN model. The topology of the model is defined by the AI developer to make the best use of the available data and provide the output that the application requires. The NN model is trained by passing the data sets through it iteratively, with the goal of continuously minimizing the error at the output of the model. The NN frameworks include tools that can aid in this process.
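The idea of passing the data through the model repeatedly to reduce the output error can be sketched in a few lines of Python. This is a deliberately tiny stand-in: it fits a single linear node with plain gradient descent on a mean squared error, rather than running backpropagation through a multi-layer network as a real framework would. The data set, learning rate and epoch count are assumptions for illustration.

```python
def mean_squared_error(weight, bias, samples):
    """Average squared difference between predictions and expected values."""
    return sum((weight * x + bias - y) ** 2 for x, y in samples) / len(samples)


def train(samples, learning_rate=0.1, epochs=500):
    """Fit y = weight * x + bias by gradient descent on the mean squared error."""
    weight, bias = 0.0, 0.0
    count = len(samples)
    history = []
    for _ in range(epochs):
        # Gradients of the error with respect to the weight and the bias.
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in samples) / count
        grad_b = sum(2 * (weight * x + bias - y) for x, y in samples) / count
        # Step against the gradient to reduce the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
        history.append(mean_squared_error(weight, bias, samples))
    return weight, bias, history


# Hypothetical training data generated from y = 2x + 1.
SAMPLES = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias, history = train(SAMPLES)
print(round(weight, 3), round(bias, 3))  # close to 2.0 and 1.0
print(history[0] > history[-1])          # True: the error shrinks over the epochs
```

The learned weight and bias are the "fixed" values that would later be shipped to the device; the training loop itself never needs to run on the MCU.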

In the second step, these pre-trained models, optimized for certain functions like keyword spotting or speech recognition, are converted into a format suitable for MCUs. Each model is first converted into a flat buffer file using the AI converter tool. This file can optionally be run through the quantizer to reduce its size and optimize it for the MCU. The flat buffer file is then converted to C code and transferred to the target MCU as a runtime executable file.
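As a rough illustration of what quantization and conversion to C code achieve, the Python sketch below maps floating-point weights to 8-bit integers and renders them as a C array. It is not the vendor converter or quantizer: real tools serialize the whole model and may use different quantization schemes. The symmetric int8 scheme, the function names and the array name `model_weights` are all assumptions made for this example.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127].

    All weights share one scale factor, chosen so that the largest
    magnitude maps to 127. Returns (quantized_values, scale).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized values."""
    return [q * scale for q in quantized]


def to_c_array(name, values, per_line=8):
    """Render int8 values as C source defining a constant array."""
    rows = []
    for start in range(0, len(values), per_line):
        chunk = values[start:start + per_line]
        rows.append("    " + ", ".join(str(v) for v in chunk) + ",")
    body = "\n".join(rows)
    return (
        "#include <stdint.h>\n\n"
        f"const int8_t {name}[{len(values)}] = {{\n{body}\n}};\n"
    )


# Hypothetical weights from a trained layer.
WEIGHTS = [0.5, -1.27, 0.0, 1.27, 0.01]

quantized, scale = quantize_int8(WEIGHTS)
print(quantized)  # [50, -127, 0, 127, 1]
print(to_c_array("model_weights", quantized))
```

Each weight now occupies one byte instead of four, at the cost of a small rounding error of at most half the scale factor per weight. Declaring the array `const` is what typically allows a toolchain to place it in flash rather than SRAM.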

This MCU, equipped with the pre-trained embedded AI model, can now be deployed in the end device. When new data comes in, it is run through the model and an inference is generated based on the training. When new data classes come in, the NN model can be sent back to the cloud for re-training and the new re-trained model can be programmed on the MCU, potentially via OTA firmware upgrades.

There are two different ways that an MCU based AI solution can be architected. For the purpose of this discussion, we are assuming the use of Arm Cortex-M cores in the target MCUs.

In the first method, the converted NN model is executed on the Cortex-M CPU core and is accelerated using the CMSIS-NN libraries. This is a simple configuration that can be handled without any additional hardware acceleration and is suited for the simpler AI applications such as keyword spotting, vibration analysis and sensor hubs.

A more sophisticated and higher-performance option is to include an NN accelerator, or Micro Neural Processing Unit (u-NPU), on the MCU. In this method, the NN model is executed on the u-NPU. These u-NPUs accelerate machine learning in resource-constrained IoT end devices and might support compression that can reduce the power consumption and size of the model. They support operators that can fully execute most of the common neural networks for audio processing, speech recognition, image classification and object detection. Networks that are not supported by the u-NPU can fall back to the main CPU core, where they are accelerated by the CMSIS-NN libraries.
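One way to picture the fallback is as a planning step that decides, for each part of a model, whether it runs on the u-NPU or on the CPU core. The Python sketch below does this at the level of individual operators, which is an assumption; the supported-operator set, the operator names and the function names are hypothetical and do not describe any specific u-NPU or toolchain.

```python
# Hypothetical set of operators that the accelerator can execute.
NPU_SUPPORTED_OPS = {"conv2d", "depthwise_conv2d", "fully_connected", "max_pool"}


def assign_operators(model_ops, npu_supported=NPU_SUPPORTED_OPS):
    """Return (operator, target) pairs, using the CPU for unsupported operators."""
    return [(op, "npu" if op in npu_supported else "cpu") for op in model_ops]


def group_segments(plan):
    """Merge consecutive operators with the same target into segments.

    Fewer segments means fewer hand-offs between the accelerator and the CPU.
    """
    segments = []
    for op, target in plan:
        if segments and segments[-1][0] == target:
            segments[-1][1].append(op)
        else:
            segments.append((target, [op]))
    return segments


# Hypothetical model expressed as an ordered list of operators.
MODEL_OPS = ["conv2d", "max_pool", "custom_fft", "fully_connected", "softmax"]

for target, ops in group_segments(assign_operators(MODEL_OPS)):
    print(target, ops)
# npu ['conv2d', 'max_pool']
# cpu ['custom_fft']
# npu ['fully_connected']
# cpu ['softmax']
```

A model whose operators are all in the supported set would form a single accelerator segment, which corresponds to the fully accelerated case described above.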

These methods show just a couple of ways to incorporate AI in MCU-based devices. As MCUs push the performance boundaries to higher levels, closer to those expected from MPUs, we expect to start seeing full AI capabilities, including lightweight learning algorithms as well as inference, built directly on MCUs.

Renesas and AI

Renesas has a comprehensive family of Arm-based MCUs, the RA family, that are capable of running AI applications. All RA family MCUs are built around Arm Cortex-M cores and offer a rich feature set that includes on-chip flash and SRAM, serial communication peripherals, Ethernet, graphics/HMI and analog features. They also support advanced security with symmetric and asymmetric cryptography, immutable storage, isolation of security assets and tamper resistance.

Renesas is working closely with ecosystem partners to bring end-to-end AI solutions in predictive analytics, vision and voice applications, amongst others. These new AIoT technologies have opened up significant new opportunities for Renesas MCUs. Applications using these capabilities span market segments such as industrial automation, smart homes, building automation, healthcare and agriculture.

Renesas’s “e-AI” (embedded AI) solution works with two popular NN frameworks: Caffe, developed by UC Berkeley, and TensorFlow, from Google. It uses a Deep Neural Network (DNN), a multilayered network that is particularly suited to applications involving image classification, voice recognition or natural language processing.

The Renesas e-AI tools, embedded in the e2 Studio Integrated Development Environment, convert the NN model into a C/C++ based form that is usable by the MCU and assist in embedding the pre-trained NN model on the target MCU. This AI-enabled MCU can then be deployed in IoT end devices.

AI on the Edge is the future

Implementation of AI on resource-constrained MCUs is set to grow rapidly. We will continue to see new applications and use cases emerge as MCUs push the boundary on performance and blur the line between MCUs and MPUs, and as more “thin” NN models suitable for resource-constrained devices become available. As MCU performance increases, we will likely see lightweight learning algorithms, in addition to inference, run directly on the MCU. This will open up new markets and applications for MCU manufacturers and will become an area of significant investment for them.

About the author

Kavita Char is a Senior Staff Product Marketing Manager at Renesas Electronics America. She has over 20 years of experience in software/applications engineering and product management roles. With extensive experience in IoT applications, MCUs and wireless connectivity, she is now responsible for definition and concept to launch management of next-generation Arm based high performance MCUs and solutions at Renesas.

Renesas Electronics Europe | https://www.renesas.com