In principle, the basic HMI concept is not a new one; arguably, rudimentary forms of it were in use as far back as the industrial revolution of the 19th Century. The Jacquard loom and Babbage's Difference Engine are examples from that era of machinery being controlled through some form of human input. With the arrival of the computing age, mechanical keyboards became the means by which commands were entered and programs set running. More recently, touch-based display technology (most notably projected capacitive variants) has brought more advanced and intuitive HMIs to the fore. The touch-sensitive controls on MP3 players at the start of the new millennium proved to be a key factor in these gadgets' widespread popularity, and when the first smartphones and tablets appeared, touchscreens were designed into them too. Before long, touch had become the default way of interacting with consumer electronics. Industrial controls soon followed suit, with clunky switches and dials being supplanted by sleek and far more reliable touch-based alternatives. Now there are calls to take HMIs even further.

Though touch-enabled HMIs have many appealing qualities, there are situations where they are not the best choice. Physical contact can itself be a problem. Touch surfaces may help spread germs in clinical environments, and in public places, information terminals may be used by many people over the course of a day with no opportunity for the surface to be cleaned. Also, where the user's concentration must remain fixed on a primary task (such as driving a vehicle or operating heavy machinery), using a touchscreen for some secondary function could prove too much of a distraction. Consequently, there is growing interest in non-contact alternatives.

Though voice control is becoming increasingly popular within households (thanks to the widespread proliferation of digital assistants), offering a way for simple instructions to be carried out, it is not always applicable. This is especially true for outdoor public use, or in industrial and automotive settings, where there is likely to be substantial background noise to contend with. Misrecognised commands, and the effort of correcting them, can mean an action takes too long to complete and lead to user frustration. There may also be privacy considerations to factor in. So, though voice control is clearly valid in some scenarios, other methods need to be looked into.

Uptake of ToF

One option that is gaining a lot of traction is time-of-flight (ToF) technology. This provides a straightforward means of controlling electronic systems through hand movements alone, without the user having to divert their attention. In simple terms, infrared (IR) pulses are emitted, and when they hit an object they are reflected back and picked up by some form of sensing array. Because light travels at a known speed, measuring the delay between a pulse being sent out and its reflection being detected allows the distance to the object to be calculated accurately: it is half the round-trip time multiplied by the speed of light. Furthermore, by taking repeated measurements, movement of the object can be tracked, and from this a variety of gestures can be recognised.
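The distance calculation described above can be sketched in a few lines of Python. This is a minimal illustration, assuming an idealised single-zone sensor that reports the raw round-trip time; commercial modules perform this conversion internally. The `classify_push_pull` helper and its 5cm threshold are hypothetical, showing only how a trend in successive distance readings could be turned into a simple gesture.

```python
from typing import Sequence

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from the round-trip time of an IR pulse.

    The pulse travels out to the object and back again, so the one-way
    distance is half of the total path covered at the speed of light.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def classify_push_pull(distances_m: Sequence[float], min_change_m: float = 0.05) -> str:
    """Hypothetical helper: label a short run of distance readings as 'push'
    (hand moving towards the sensor), 'pull' (moving away) or 'none'."""
    if len(distances_m) < 2:
        return "none"
    change_m = distances_m[-1] - distances_m[0]
    if change_m <= -min_change_m:
        return "push"
    if change_m >= min_change_m:
        return "pull"
    return "none"


if __name__ == "__main__":
    # A 2 ns round trip corresponds to roughly 0.3 m.
    print(f"{tof_distance_m(2e-9):.3f} m")
    print(classify_push_pull([0.30, 0.25, 0.20, 0.15]))
```

The sketch also shows why ToF timing is demanding: a 1cm change in distance alters the round trip by only about 67ps.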

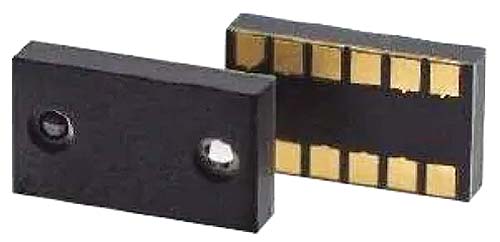

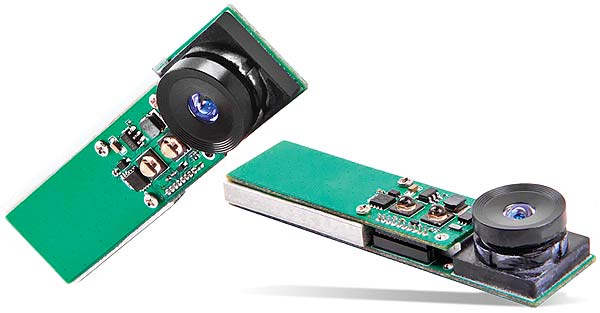

The RFD77402 from RF Digital has the capacity to handle rapid and accurate gesture recognition, with a 10Hz refresh rate and ±10% precision. Supplied in a compact 4.8mm × 2.8mm × 1.0mm surface-mount package, this 3D ToF sensor module comprises an 850nm VCSEL emitter with a 29° field of illumination (FoI) and its accompanying driver circuitry, a microcontroller unit (MCU) and on-board memory, plus a photosensor with a 55° field of view (FoV) and appropriate optics. Captured data is transferred to the host system over its I2C interface.

Seeed Studio's DepthEye 3D ToF camera module incorporates Texas Instruments' OPT8320 sensor, which has a 1/6″ optical format, an 80×60 pixel resolution and a frame rate of up to 1000fps. This streamlined 60mm × 17mm × 12mm unit connects to a laptop or tablet via USB, adding gesture recognition capabilities to such hardware. It supports Windows 7 and later operating systems.
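For a camera-style sensor such as the OPT8320, each frame is a grid of depth values rather than a single distance. The sketch below is a hypothetical illustration, not Seeed's or TI's software: it assumes 80×60 frames delivered as nested lists of depths in metres, treats the nearest object as the hand, and labels a horizontal swipe from how its centroid moves between frames. The `synthetic_frame` helper, the 5cm depth band and the 20-pixel threshold are all assumptions made for illustration.

```python
from typing import List, Optional, Sequence, Tuple

Frame = List[List[float]]  # depth in metres, indexed [row][col]; 0.0 marks an invalid pixel
Centroid = Tuple[float, float]


def nearest_blob_centroid(frame: Frame, band_m: float = 0.05) -> Optional[Centroid]:
    """(row, col) centroid of pixels within band_m of the closest valid depth,
    assuming the closest object in view is the user's hand."""
    valid = [d for row in frame for d in row if d > 0.0]
    if not valid:
        return None
    nearest = min(valid)
    row_sum = col_sum = 0.0
    count = 0
    for r, row in enumerate(frame):
        for c, d in enumerate(row):
            if 0.0 < d <= nearest + band_m:
                row_sum += r
                col_sum += c
                count += 1
    return row_sum / count, col_sum / count


def classify_swipe(track: Sequence[Optional[Centroid]], min_shift_px: float = 20.0) -> str:
    """Label a horizontal swipe from the first and last tracked centroids."""
    points = [p for p in track if p is not None]
    if len(points) < 2:
        return "none"
    shift = points[-1][1] - points[0][1]
    if shift >= min_shift_px:
        return "swipe_right"
    if shift <= -min_shift_px:
        return "swipe_left"
    return "none"


def synthetic_frame(hand_col: int, width: int = 80, height: int = 60) -> Frame:
    """Hypothetical test frame: background at 1.0 m, a 6x6 'hand' patch at 0.3 m."""
    frame = [[1.0] * width for _ in range(height)]
    for r in range(27, 33):
        for c in range(hand_col, hand_col + 6):
            frame[r][c] = 0.3
    return frame


if __name__ == "__main__":
    track = [nearest_blob_centroid(synthetic_frame(col)) for col in (10, 30, 50, 70)]
    print(track[0], classify_swipe(track))
```

Whether "right" corresponds to the user's right depends on how the sensor is mounted and whether its image is mirrored.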

Based on the company's patented FlightSense™ technology, STMicroelectronics' VL6180 is intended to enable gesture recognition in smartphones, tablets and domestic appliances. This three-in-one optical module (measuring 4.8mm × 2.8mm × 1.0mm) features an 850nm VCSEL emitter and a ToF proximity sensor, along with a 16-bit output ambient light sensor (to mitigate interference from background illumination).

Concentrating mainly on HMI implementation in automotive designs (though also applicable to industrial automation), Melexis' 320×240 pixel resolution MLX75x23 image sensor and MLX75123 companion IC give engineers a complete AEC-Q100-compliant system solution for ToF-based HMIs, so that drivers need not take their eyes off the road. This means phone calls can be made or the infotainment system accessed without putting vehicle occupants, or other road users, in danger. Given the demanding application environment, these components support a -40°C to 105°C operating temperature range. In addition, their high optical resilience means that extreme changes in ambient light conditions can be dealt with (the system can cope with as much as 120klux of incident background light). Through the companion IC, regions of interest can be selected and response activation triggers set.
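The region-of-interest and activation-trigger idea can be illustrated with a host-side sketch. This does not use the MLX75123's actual configuration interface; the `RegionOfInterest` and `ActivationTrigger` classes, the `make_frame` helper, the 30% occupancy threshold and the three-frame hold are all hypothetical. It assumes 320×240 depth frames in metres and fires once when a hand has occupied a chosen region, within a chosen depth window, for several consecutive frames.

```python
from dataclasses import dataclass
from typing import List

Frame = List[List[float]]  # depth in metres, indexed [row][col]


@dataclass
class RegionOfInterest:
    row0: int
    row1: int  # exclusive
    col0: int
    col1: int  # exclusive
    near_m: float
    far_m: float


def occupancy(frame: Frame, roi: RegionOfInterest) -> float:
    """Fraction of ROI pixels whose depth lies inside the ROI's depth window."""
    total = hits = 0
    for r in range(roi.row0, roi.row1):
        for c in range(roi.col0, roi.col1):
            total += 1
            if roi.near_m <= frame[r][c] <= roi.far_m:
                hits += 1
    return hits / total if total else 0.0


class ActivationTrigger:
    """Fires once when the ROI stays occupied for hold_frames consecutive frames."""

    def __init__(self, roi: RegionOfInterest, threshold: float = 0.3, hold_frames: int = 3):
        self.roi = roi
        self.threshold = threshold
        self.hold_frames = hold_frames
        self._count = 0
        self._fired = False

    def update(self, frame: Frame) -> bool:
        if occupancy(frame, self.roi) >= self.threshold:
            self._count += 1
        else:
            # Region is clear again: reset the counter and re-arm the trigger.
            self._count = 0
            self._fired = False
        if self._count >= self.hold_frames and not self._fired:
            self._fired = True
            return True
        return False


def make_frame(hand: bool, width: int = 320, height: int = 240) -> Frame:
    """Synthetic frame: background at 2.0 m, optional 60x60 'hand' patch at 0.5 m."""
    frame = [[2.0] * width for _ in range(height)]
    if hand:
        for r in range(90, 150):
            for c in range(130, 190):
                frame[r][c] = 0.5
    return frame


if __name__ == "__main__":
    roi = RegionOfInterest(80, 160, 120, 200, near_m=0.2, far_m=1.0)
    trigger = ActivationTrigger(roi)
    frames = [make_frame(False)] + [make_frame(True)] * 4 + [make_frame(False)]
    print([trigger.update(f) for f in frames])
```

Requiring several consecutive frames before firing, and re-arming only after the region clears, is one simple way of avoiding repeated or spurious activations.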

Potential of mmWave in HMI

Also suited to contactless gesture recognition, but without relying on optoelectronics, Texas Instruments' IWR1642 mmWave motion sensor can capture data relating to the range, velocity and angle of a target. From this, hand swipes (either vertical or horizontal) and finger twirls can be registered. Operating in the 76GHz to 81GHz frequency range, the unit has a 40MHz transmitter and a low-noise receiver (14dB noise figure). An ARM Cortex-R4F processor core takes care of front-end configuration and system calibration, while a high-performance C674x DSP handles the entire signal processing workload. A PLL and ADCs are also integrated into this single-chip solution, along with 1.75MB of memory. The main attraction of mmWave technology is that it passes through many non-conductive materials, such as plastics and glass, so there is no line-of-sight dependency. The sensor therefore does not have to be exposed to the external environment (and the potential sources of damage present there), but can instead be located behind a protective enclosure. The low power consumption needed for sensing via this method is also advantageous.
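Sensors such as the IWR1642 use frequency-modulated continuous-wave (FMCW) chirps, and the standard FMCW relations below show how range, velocity and angle can be derived from the received signal. This is a textbook sketch, not TI's processing chain; the sweep bandwidth, chirp slope, chirp period and antenna spacing used in the example are assumed values rather than IWR1642 settings.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_resolution_m(sweep_bandwidth_hz: float) -> float:
    """Smallest separation between two resolvable targets: c / (2B)."""
    return SPEED_OF_LIGHT_M_S / (2.0 * sweep_bandwidth_hz)


def range_from_beat_m(beat_freq_hz: float, chirp_slope_hz_per_s: float) -> float:
    """Target range from the beat (IF) frequency: R = c * f_b / (2 * S)."""
    return SPEED_OF_LIGHT_M_S * beat_freq_hz / (2.0 * chirp_slope_hz_per_s)


def velocity_from_phase_m_s(delta_phi_rad: float, wavelength_m: float,
                            chirp_period_s: float) -> float:
    """Radial velocity from the phase change between consecutive chirps:
    v = lambda * dphi / (4 * pi * Tc)."""
    return wavelength_m * delta_phi_rad / (4.0 * math.pi * chirp_period_s)


def angle_from_phase_deg(delta_phi_rad: float, wavelength_m: float,
                         rx_spacing_m: float) -> float:
    """Angle of arrival from the phase difference between adjacent receive antennas:
    theta = asin(lambda * dphi / (2 * pi * d))."""
    return math.degrees(math.asin(wavelength_m * delta_phi_rad
                                  / (2.0 * math.pi * rx_spacing_m)))


if __name__ == "__main__":
    wavelength = SPEED_OF_LIGHT_M_S / 77e9  # roughly 3.9 mm at 77 GHz
    print(f"range resolution: {range_resolution_m(4e9) * 100:.2f} cm")  # assumed 4 GHz sweep
    print(f"range: {range_from_beat_m(1e6, 60e12):.3f} m")  # assumed 60 MHz/us slope
    print(f"velocity: {velocity_from_phase_m_s(0.1, wavelength, 100e-6):.3f} m/s")
    print(f"angle: {angle_from_phase_deg(math.pi / 2, wavelength, wavelength / 2):.1f} deg")
```

In gesture terms, a horizontal swipe would show up as the angle sweeping across the field of view, while a finger twirl could produce a radial velocity that repeatedly changes sign; a classifier would then work on tracks of these quantities.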

Electric Field-Based HMIs

Built upon the company's proprietary GestIC technology, Microchip's MGC3140 controller ICs rely on quasi-static electrical near-field proximity sensing, an approach that is still in its infancy but shows a great deal of promise. The controller can detect gestures at distances of up to 10cm from the HMI surface. An electric field is propagated from this surface, with a DC voltage providing a constant field strength, while an AC voltage adds a sinusoidally varying component. Conductive objects (such as parts of the human body) present in the field distort it, and these distortions are picked up by receive electrodes to determine position and movement. Because the mechanism relies on neither light nor sound, this form of HMI is unaffected by ambient light or acoustic noise, making it suitable for use in gaming consoles, medical equipment, automotive control pillars and all sorts of domestic appliances. The MGC3140 supports a 150dpi spatial resolution and can record positions at a rate of up to 200Hz.
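As a rough illustration of how position might be derived from field distortions, the sketch below assumes four receive electrodes placed north, south, east and west of the surface and estimates the hand position from the balance of their signal deviations, then recognises a quick flick from positions sampled at 200Hz. This is a simplified, hypothetical model, not Microchip's GestIC algorithm (the MGC3140 performs its own processing on-chip); the electrode layout, normalisation and thresholds are assumptions.

```python
from typing import List, Sequence, Tuple

Position = Tuple[float, float]


def estimate_position(north: float, south: float, east: float, west: float) -> Position:
    """Rough (x, y) in [-1, 1] from each electrode's signal deviation.

    A hand closer to an electrode disturbs its signal more, so comparing
    opposite electrodes gives a crude coordinate along each axis.
    """
    def axis(positive: float, negative: float) -> float:
        total = positive + negative
        return 0.0 if total <= 0.0 else (positive - negative) / total

    return axis(east, west), axis(north, south)


def detect_flick(positions: Sequence[Position], sample_rate_hz: float = 200.0,
                 min_travel: float = 1.0, max_duration_s: float = 0.5) -> str:
    """Label a quick flick by its dominant direction, or return 'none'."""
    if len(positions) < 2:
        return "none"
    duration_s = (len(positions) - 1) / sample_rate_hz
    if duration_s > max_duration_s:
        return "none"  # too slow to count as a flick
    dx = positions[-1][0] - positions[0][0]
    dy = positions[-1][1] - positions[0][1]
    if max(abs(dx), abs(dy)) < min_travel:
        return "none"
    if abs(dx) >= abs(dy):
        return "east" if dx > 0 else "west"
    return "north" if dy > 0 else "south"


if __name__ == "__main__":
    # Synthetic west-to-east pass lasting 0.1 s (21 samples at 200 Hz).
    samples: List[Position] = []
    for i in range(21):
        t = i / 20
        samples.append(estimate_position(0.5, 0.5, 0.1 + 0.9 * t, 1.0 - 0.9 * t))
    print(detect_flick(samples))
```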

Other Prospects

A host of other potential HMI manifestations are currently being experimented with elsewhere in the industry. Solutions that utilise ultrasound projection are under development. Elliptic Labs' INNER MAGIC is able to detect hand gestures (to control smart speakers and suchlike) through the company's patented touch-free sensor technology with a 180° FoV. The mid-air haptics offered by Bristol-based start-up Ultrahaptics use a 256-element grid of ultrasonic transducers (along with a motion-tracking image sensor) to construct virtual HMIs that, despite having no physical presence, still provide haptic feedback. As a result, they can emulate conventional manual controls (such as buttons and sliders) without any surface needing to be cleaned or maintained. Surgeons and industrial operatives could clearly benefit from this, but there are also opportunities in retail, home automation, digital signage and automotive.
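Mid-air haptics relies on phased-array focusing: each transducer is fired with a delay chosen so that all the wavefronts arrive at the focal point in phase, creating a localised pressure spot that can be felt. The sketch below assumes a 16×16 grid (matching the 256 elements mentioned above), a 40kHz drive frequency, a 10.5mm element pitch and sound travelling at 343m/s in air; these are illustrative values, not Ultrahaptics' specifications, and attenuation and transducer directivity are ignored.

```python
import cmath
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]

SPEED_OF_SOUND_M_S = 343.0  # air at roughly 20 degC
FREQ_HZ = 40_000.0          # assumed drive frequency
PITCH_M = 0.0105            # assumed centre-to-centre element spacing
GRID = 16                   # 16 x 16 = 256 elements


def element_positions() -> List[Point3]:
    """Element centres in the z = 0 plane, with the array centred on the origin."""
    offset = (GRID - 1) * PITCH_M / 2.0
    return [(c * PITCH_M - offset, r * PITCH_M - offset, 0.0)
            for r in range(GRID) for c in range(GRID)]


def focus_delays_s(focus: Point3) -> List[float]:
    """Firing delay per element so every wavefront reaches the focus together.

    The farthest element fires first (zero delay); nearer ones wait by
    the difference in travel time.
    """
    distances = [math.dist(p, focus) for p in element_positions()]
    farthest = max(distances)
    return [(farthest - d) / SPEED_OF_SOUND_M_S for d in distances]


def relative_amplitude(delays: List[float], point: Point3) -> float:
    """Magnitude of the summed phasors at a point (attenuation ignored).

    A value of 256 means all elements arrive perfectly in phase.
    """
    omega = 2.0 * math.pi * FREQ_HZ
    total = 0j
    for p, delay in zip(element_positions(), delays):
        arrival_s = delay + math.dist(p, point) / SPEED_OF_SOUND_M_S
        total += cmath.exp(-1j * omega * arrival_s)
    return abs(total)


if __name__ == "__main__":
    focus = (0.0, 0.0, 0.2)  # 20 cm above the array centre
    delays = focus_delays_s(focus)
    print(f"at focus: {relative_amplitude(delays, focus):.1f}")
    print(f"5 cm to the side: {relative_amplitude(delays, (0.05, 0.0, 0.2)):.1f}")
```

Moving the focal point is simply a matter of recomputing the delays, which is how a virtual button or slider could be traced out in mid-air.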

Touch is still important, though, and its value in next-generation HMI construction should not be overlooked. Engineers at KAIST, Korea's leading research institute, recently reported progress in using sound wave localisation sensing to generate virtual keyboards with standard smartphone handsets. This will allow walls, tables, mirrors and other everyday objects to act as touch surfaces, with considerably shorter lag than previous attempts at HMIs of this kind.
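Sound wave localisation generally rests on time difference of arrival (TDOA): a tap's vibration reaches several sensors at slightly different times, and those differences pin down where it occurred. The sketch below is a generic TDOA grid search, not KAIST's method; the four-sensor layout, the 0.6m × 0.4m surface, the 500m/s wave speed and the 5mm grid step are all hypothetical values chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def simulate_arrivals(tap: Point, sensors: Sequence[Point], wave_speed_m_s: float,
                      tap_time_s: float = 0.0) -> List[float]:
    """Arrival time at each sensor for a tap at a known position (for testing)."""
    return [tap_time_s + math.dist(tap, s) / wave_speed_m_s for s in sensors]


def locate_tap(arrivals_s: Sequence[float], sensors: Sequence[Point], wave_speed_m_s: float,
               width_m: float, height_m: float, step_m: float = 0.005) -> Point:
    """Grid search for the point whose predicted TDOAs best match the measured ones.

    Differences are taken relative to sensor 0, so the unknown tap time cancels.
    """
    measured = [t - arrivals_s[0] for t in arrivals_s]
    best, best_err = (0.0, 0.0), math.inf
    for i in range(int(round(width_m / step_m)) + 1):
        for j in range(int(round(height_m / step_m)) + 1):
            candidate = (i * step_m, j * step_m)
            d0 = math.dist(candidate, sensors[0])
            err = 0.0
            for sensor, dt in zip(sensors[1:], measured[1:]):
                predicted = (math.dist(candidate, sensor) - d0) / wave_speed_m_s
                err += (predicted - dt) ** 2
            if err < best_err:
                best, best_err = candidate, err
    return best


if __name__ == "__main__":
    sensors = [(0.0, 0.0), (0.6, 0.0), (0.0, 0.4), (0.6, 0.4)]  # corners of the surface
    speed = 500.0  # hypothetical wave speed in the surface material
    arrivals = simulate_arrivals((0.20, 0.15), sensors, speed, tap_time_s=0.123)
    print(locate_tap(arrivals, sensors, speed, width_m=0.6, height_m=0.4))
```

Only time differences are used, so the moment of the tap never needs to be known. A real system would also need to calibrate the wave speed for each surface, since it varies with the material.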

A wealth of different possibilities is now emerging that will enable HMIs to overcome the constraints that characterise certain applications and deliver better user experiences. Through IR, ultrasound, mmWave and electric fields, the scope of HMI implementation is destined to expand profoundly, complementing established touch-based technologies with new and exciting developments.

Mouser Electronics

Authorised Distributor

https://ro.mouser.com