It is estimated that over 80% of all road accidents are due to the driver being distracted in some way. The growing use of mobile phones worldwide, especially texting or taking a call while driving, has significantly increased the risk of accidents. In the United States alone, the Department of Transportation reported that mobile phones are involved in 1.6 million motor vehicle crashes each year, causing half a million injuries and 6,000 deaths annually.

How can we, as design engineers, help make our vehicles and roads safer? Is the answer enforcing better driver training standards? Laws banning talking or texting while driving do not seem to be enough to deter people.

Are humans so stubborn that we have to make our vehicles smarter instead?

The ongoing development of advanced driver assistance system (ADAS) technology is seen as one of the primary solutions for making our roads safer. As the supporting sensors have improved and deployment costs have fallen, ADAS has proliferated beyond luxury cars into mid-range and even lower-end vehicles.

According to Global Market Insights, the ADAS market is estimated to grow from USD 28.9 billion in 2017 to USD 67 billion by 2024 – more than doubling in a period of just 7 years. The firm also noted that stringent government regulations pertaining to vehicle safety, including the mandatory fitting of safety technologies (like autonomous emergency braking and parking sensors), will further spur ADAS growth.
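To put that forecast in perspective, the two figures quoted above can be converted into an implied compound annual growth rate (CAGR). The short Python sketch below is only a worked piece of arithmetic on those two numbers; the function name is our own, and it assumes smooth year-on-year growth, which real markets rarely show. It works out to a little under 13% per year.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text: USD 28.9 billion (2017) to USD 67 billion (2024).
adas_cagr = compound_annual_growth_rate(28.9, 67.0, 2024 - 2017)
print(f"Implied CAGR: {adas_cagr:.1%}")
```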

Giving ADAS a 360° View of the Road

ADAS combines different sensor technologies, including infra-red (IR), ultrasonic, radar, image sensors and LiDAR, to automate dynamic driving tasks such as steering, braking and acceleration. In a market trends study published last summer, Gartner forecast that automotive image sensors will represent USD 1.8 billion worth of annual business by 2022 and this will be driven, if you will pardon the pun, predominantly by ADAS.

The development of better cameras was key to bringing the cost of ADAS down and thereby making it more affordable for the mass market. As the main sensor resource for ADAS today, cameras are widely used in front-facing or outward-facing applications, and are becoming increasingly common in driver-facing systems. Front-/outward-facing cameras can perform numerous functions. The most notable of these are lane departure alerts, vehicle proximity monitoring, traffic sign recognition, park assist, rear-view mirror replacement, blind spot detection and obstacle/pedestrian recognition. Driver-facing implementations, by contrast, focus on determining whether the driver is able to make critical decisions, or whether the ADAS will instead need to apply the brakes, carry out an evasive manoeuvre, etc. Here, monitoring for fatigue (blink sensing) and distraction (the direction in which the driver’s head is facing) are the key tasks.
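As a rough illustration of how the two driver-facing tasks might be combined, the Python sketch below tracks the share of recent frames in which the eyes are closed (a simple blink-based fatigue cue) and how long the head has been turned away from the road. It is a minimal sketch, not a description of any production system: the `FrameObservation` fields, the `DriverMonitor` class and every threshold are hypothetical, and the eye-state and head-pose values are assumed to come from an upstream vision stage that is not shown.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class FrameObservation:
    eyes_closed: bool     # hypothetical output of an eye-state detector
    head_yaw_deg: float   # hypothetical head pose; 0 means facing the road


class DriverMonitor:
    """Flags fatigue and distraction from per-frame observations (illustrative thresholds)."""

    def __init__(self, window_frames=90, closed_ratio_limit=0.3,
                 yaw_limit_deg=30.0, distracted_frames_limit=45):
        self.closed_ratio_limit = closed_ratio_limit
        self.yaw_limit_deg = yaw_limit_deg
        self.distracted_frames_limit = distracted_frames_limit
        self._eye_history = deque(maxlen=window_frames)  # sliding window of eye states
        self._off_road_streak = 0                        # consecutive frames looking away

    def update(self, obs: FrameObservation) -> dict:
        self._eye_history.append(obs.eyes_closed)
        if abs(obs.head_yaw_deg) > self.yaw_limit_deg:
            self._off_road_streak += 1
        else:
            self._off_road_streak = 0

        # Only judge fatigue once a full window of frames has been seen.
        window_full = len(self._eye_history) == self._eye_history.maxlen
        closed_ratio = sum(self._eye_history) / len(self._eye_history)
        return {
            "fatigued": window_full and closed_ratio >= self.closed_ratio_limit,
            "distracted": self._off_road_streak >= self.distracted_frames_limit,
        }
```

A caller would feed one `FrameObservation` per camera frame into `update()` and hand the resulting flags to whatever logic decides between warning the driver and intervening.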

To improve the reliability of ADAS technologies, electronic component manufacturers such as ON Semiconductor are developing image sensors that perform well in very bright or low light conditions. The AR0230AT is a 1/2.7” format CMOS device with a 1928×1088 active-pixel array that captures images in either linear or high dynamic range (HDR) modes with a rolling-shutter readout. It supports both video and single frame operation, and includes camera functions such as in-pixel binning and windowing. Designed for both low light and HDR scene performance, this energy-efficient image sensor is programmable through a simple two-wire serial interface.
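For readers unfamiliar with the terms, binning merges neighbouring pixels into one (trading resolution for sensitivity and lower data rates), while windowing reads out only a sub-region of the array. The Python sketch below mimics both in software on a small grayscale frame held as a list of rows. It is purely conceptual: the sensor performs these operations in hardware, colour filter patterns are ignored, and the function names are our own rather than anything from the device’s register map.

```python
def bin_2x2(frame):
    """Average each 2x2 block of a grayscale frame given as a list of rows."""
    height, width = len(frame), len(frame[0])
    if height % 2 or width % 2:
        raise ValueError("frame dimensions must be even")
    return [
        [
            (frame[r][c] + frame[r][c + 1] + frame[r + 1][c] + frame[r + 1][c + 1]) / 4
            for c in range(0, width, 2)
        ]
        for r in range(0, height, 2)
    ]


def window(frame, top, left, height, width):
    """Return only the requested region of interest from the frame."""
    return [row[left:left + width] for row in frame[top:top + height]]


frame = [
    [1, 3, 5, 7],
    [1, 3, 5, 7],
    [2, 2, 4, 4],
    [2, 2, 4, 4],
]
binned = bin_2x2(frame)            # half the width and half the height
region = window(frame, 1, 1, 2, 2)  # 2x2 region starting at row 1, column 1
```

Applied to a full 1928×1088 frame, 2×2 binning of this kind would yield a 964×544 image.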

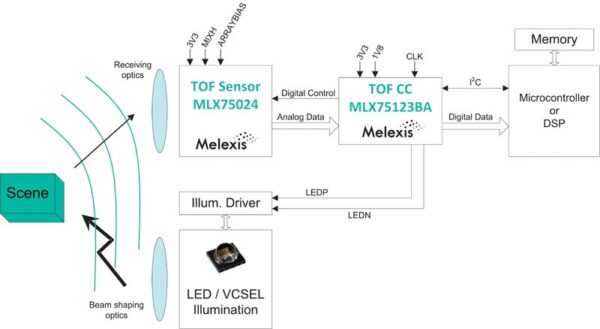

Aimed at driver-facing implementations, Melexis’ MLX75x23 arrays provide a complete time-of-flight (ToF) 3D imaging solution. They feature 320×240 QVGA resolution ToF pixels based on DepthSense pixel technology and exhibit strong sunlight robustness. The associated AEC-Q100-qualified MLX75123 companion chip controls the ToF sensor and the illumination unit, as well as streaming data to the host processor. These chipsets offer performance, flexibility, and design simplicity in a very compact 3D camera.
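The principle behind ToF imaging is that distance follows from how long light takes to travel to the scene and back. In phase-measuring (continuous-wave) ToF systems, this delay is observed as a phase shift of the modulated illumination. The Python sketch below shows that textbook relationship only; the 20MHz modulation frequency is an illustrative assumption, not a figure taken from the Melexis documentation, and the function names are our own.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(phase_shift_rad: float, modulation_freq_hz: float) -> float:
    """Distance implied by a measured phase shift of the modulated light."""
    # Light covers the distance twice (out and back), hence 4*pi rather than 2*pi.
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_freq_hz)


def unambiguous_range_m(modulation_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps around."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_freq_hz)


ASSUMED_MODULATION_HZ = 20e6  # illustrative value only
print(f"Unambiguous range: {unambiguous_range_m(ASSUMED_MODULATION_HZ):.2f} m")
print(f"Distance at half-cycle phase shift: {tof_distance_m(math.pi, ASSUMED_MODULATION_HZ):.2f} m")
```

The trade-off is visible in the formulas: a higher modulation frequency improves depth precision but shortens the range over which distances can be measured without ambiguity. For an in-cabin camera, where the subject is close to the sensor, that limit is rarely a concern.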

Teaching Cars to See Better than Humans

Sensors detecting objects around the vehicle need to work together with object identification/classification technologies.

Generally, these consist of processors powered by machine or deep learning that enable the vehicle’s ADAS to effectively recognize movement, patterns, people, other vehicles, street signs and potential obstacles. One example is NXP Semiconductors’ S32V234 vision and sensor fusion processor IC, which is designed to support computation-intensive image processing applications. It incorporates an embedded image signal processor, a powerful 3D graphics processing unit, dual APEX-2 vision accelerators, plus integrated security. Part of the NXP SafeAssure program, this device is suitable for ISO 26262 ASIL B functional safety compliant ADAS applications, such as pedestrian detection, lane departure warning, smart head beam control and traffic sign recognition. The processor integrates four 64-bit ARM Cortex-A53 cores running at up to 1GHz with a NEON co-processor and an ARM Cortex-M4 CPU. The Cortex-M4 allows automotive operating systems to interface with external devices separately from the main application cores.

Similarly, the TDA3x SoC series from Texas Instruments comprises highly optimized and scalable devices designed to meet ADAS requirements. These SoCs combine low power operation, elevated performance (with signal processing at up to 745MHz), smaller form factors and ADAS vision analytics processing that strives towards greater vehicle autonomy. Supporting Full-HD video (1920×1080 resolution at 60fps), they enable sophisticated embedded vision functions like surround view, front camera, rear camera, radar and sensor fusion on a single scalable architecture.

Automotive Radar

Smarter microcontroller units (MCUs) that power long range, high resolution radar will be key to developing the next generation of safety-critical systems. NXP’s S32Rx radar MCUs are 32-bit devices that meet the high performance computation demands of modern beam-forming, fast chirp modulation radar systems, featuring radar I/F and processing, plus dual e200z cores and capacious system memory. Qualified to AEC-Q100 grade 1 and supplied in 257 MAPBGA packages, they are designed for applications like adaptive cruise control, autonomous emergency braking and rear traffic crossing alert.
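To give a feel for the computation involved, a chirp-modulated radar sweeps its transmit frequency linearly and infers range from the beat frequency between the transmitted and received signals. The Python sketch below shows only the standard textbook relationships for a single static target; the bandwidth, chirp duration and beat frequency are illustrative assumptions, and nothing here reflects the S32Rx’s actual processing chain or API.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_beat_m(beat_freq_hz: float, chirp_bandwidth_hz: float,
                      chirp_duration_s: float) -> float:
    """Target range implied by the beat frequency of a linear chirp."""
    chirp_slope_hz_per_s = chirp_bandwidth_hz / chirp_duration_s
    # Factor of 2 accounts for the round trip to the target and back.
    return SPEED_OF_LIGHT_M_S * beat_freq_hz / (2.0 * chirp_slope_hz_per_s)


def range_resolution_m(chirp_bandwidth_hz: float) -> float:
    """Smallest separation at which two targets can be told apart in range."""
    return SPEED_OF_LIGHT_M_S / (2.0 * chirp_bandwidth_hz)


# Illustrative values only: 1 GHz sweep over 50 microseconds, 1 MHz beat frequency.
example_range_m = range_from_beat_m(1e6, 1e9, 50e-6)
example_resolution_m = range_resolution_m(1e9)
print(f"Range: {example_range_m:.2f} m, resolution: {example_resolution_m:.3f} m")
```

In practice, the beat frequencies are extracted with FFTs across many chirps and antenna channels, which is where the computational load on the radar MCU comes from.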

Designing a Future for Fully Autonomous Vehicles

Although there is a lot of hype about self-driving cars currently, it must be remembered that we are still in the early stages of this technology. The Society of Automotive Engineers (SAE) has defined six levels of automation, with level 0 meaning no automation whatsoever and level 5 being fully autonomous. Even today’s most advanced production vehicles offering a degree of autonomy (those using Tesla’s Autopilot feature, for example) are still only at SAE level 2.
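For engineers who need to reason about these levels in software, the scale from level 0 to level 5 maps naturally onto an enumeration. The Python sketch below is one possible representation; the member names paraphrase the SAE J3016 level titles, and the `driver_must_supervise` helper is a hypothetical convenience of our own that reflects the general rule that the human remains responsible for monitoring the road up to and including level 2.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SaeLevel) -> bool:
    """True when the human driver must continuously monitor the driving environment."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


for level in SaeLevel:
    print(f"Level {level.value} ({level.name}): supervise={driver_must_supervise(level)}")
```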

Although there have been significant advances in image sensing and object recognition technologies over the past decade, we are still a long way from fully autonomous vehicles. It is estimated that it will be at least another 15 years before SAE level 5 is implemented in the average car. However, the ambitious timelines of OEMs for achieving high degrees of automated driving are speeding up deployment of the ADAS components that underpin this technology.

Volkswagen, Mobileye and Champion Motors recently announced a joint venture to deploy a fully driverless ride-hailing vehicle service in Israel – with limited rollout scheduled for 2019, followed by full-scale operation in 2022. Volkswagen will provide an electric vehicle platform into which Mobileye will integrate turnkey software and hardware technologies to enable automation, while Champion Motors will handle fleet management and maintenance aspects. ABI Research forecasts that in 2025, the automotive industry will ship 8 million consumer vehicles that feature SAE level 3 and 4 technologies – where drivers will still be necessary, but are able to completely shift safety-critical functions to the vehicle under certain conditions.

The speed at which fully autonomous vehicles will enter the mainstream market will depend on whether society feels safe in cars where nobody is at the wheel. Complex legal questions also need to be resolved about who (or what) would be held accountable when an accident involves an autonomous vehicle. The road ahead is one with plenty of uncertainties, but it is clear the days of human control being an integral part of the driving experience will soon be behind us.

Mouser Electronics

Authorised Distributor

www.mouser.com